Statistics: 40% are made up!

Question Statistics

When you see a statistic, your first question should be…

“Where did they get that?”

The second question should be…

“How do I know that is accurate?”

Articles that provide statistics should cite a source, and the source should provide a way to verify the statistic.

For example, where did my “40% of statistics are made up” statistic come from? I made it up! Now you know the source.

Here is a more difficult statistic to verify.

In 2019, Joe Kiani, Founder and Chairman of the Patient Safety Movement, said that 273,077 lives had been saved through the efforts of 4,710 hospitals and 89 healthcare technology companies that had signed an open data pledge. These lives had been saved since the first Patient Safety, Science & Technology Summit, held in 2013.

However, when I read the press release, I couldn’t find a source for the statistic or a way to verify it.

Maybe he shared the source and methods at his talk, but they weren’t in the press release.

Next, I would ask: does this statistic make sense? How does he measure “lives saved”?

There is no standard method across the USA for determining the number of lives lost due to medical errors, hospital-acquired infections, and errors in administering prescription medicine. Therefore, how would you know the exact number (273,077) of lives saved? Can you know the exact number of lives saved without knowing the number of lives lost?

Without an accurate measurement system, statistics are a guess. And a guess is not a good way to manage performance improvement efforts.

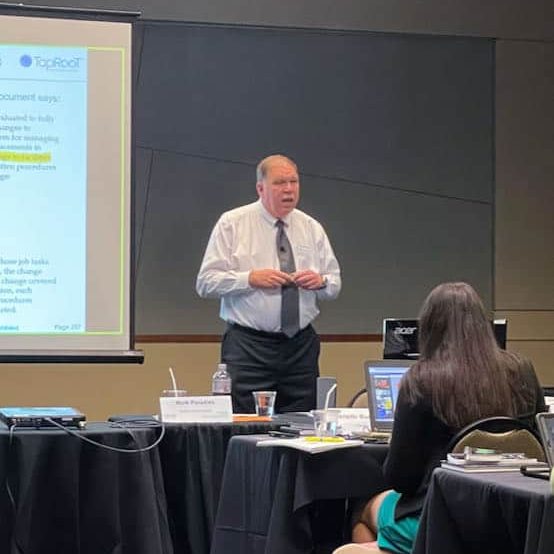

Learn Advanced Trending Techniques

Would you like to learn more about measuring performance and determining trends? Then I have two suggestions for you.

First, attend the 2-day course “Measurement, Trending, and Predictive Analytics: How the Best Use Data Every Day to Improve Work,” taught by Kevin McManus and held at the Horseshoe Bay Resort near Austin, Texas, on April 29-30.

Second, register for the upcoming Global TapRooT® Summit, which will be held May 1-3 at the Horseshoe Bay Resort, and attend the “Using Trending to Manage Improvement” session presented by Mark Paradies (Wednesday, May 1, at 2:40 pm).


(This article was originally posted in 2019, but it has been revised and reposted here because the advice still applies.)

Here is his address: https://www.youtube.com/watch?v=HCmPnfn_Rto

He didn’t cite the source of his statistics.

On the society’s website, they say the “lives saved” numbers are voluntarily provided by their members.

I saw one member say that they estimated the lives saved at their facility by establishing a baseline rate of lives lost and then using the decrease in that rate to calculate the lives saved.
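Here is a minimal Python sketch of that baseline-rate arithmetic, assuming deaths are expressed as a rate per admission and the drop in rate is applied to the current year’s admissions. The function name and all of the numbers are hypothetical, chosen only to show how sensitive the result is to the choice of baseline.

```python
from fractions import Fraction


def estimated_lives_saved(baseline_deaths, baseline_admissions,
                          current_deaths, current_admissions):
    """Hypothetical baseline-rate estimate of lives saved.

    Assumes the baseline death rate would have continued unchanged and
    applies the drop in that rate to the current number of admissions.
    Fractions keep the arithmetic exact.
    """
    baseline_rate = Fraction(baseline_deaths, baseline_admissions)
    current_rate = Fraction(current_deaths, current_admissions)
    # Deaths expected at the old rate minus deaths actually observed
    return (baseline_rate - current_rate) * current_admissions


# Same current-year data, two different (hypothetical) baseline years
print(float(estimated_lives_saved(120, 40_000, 90, 45_000)))  # → 45.0
print(float(estimated_lives_saved(100, 40_000, 90, 45_000)))  # → 22.5
```

Changing the baseline by 20 deaths cuts the “lives saved” estimate in half, even though the current-year data is identical. That is why the baseline and the method need to be published alongside the number.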

For all the talks at the Patient Safety Movement Summit, see: https://www.youtube.com/user/0x2020/videos

My college statistics professor used to say that statistics of random events, such as radioactive decay, are a fairly reliable indicator of future events, but statistics that involve interpreting results can be very misleading (sometimes on purpose).

An example he brought to class was that red cars are involved in significantly more high-speed accidents than cars of any other color. A deeper dive into the data revealed that a much higher percentage of sports cars are red, and people who buy sports cars tend to drive faster than others. So it is the people who drive faster, not the red paint, who are in significantly more high-speed accidents.

Buying a red station wagon did not change the probability of getting into a high-speed accident one bit.
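To make the professor’s point concrete, here is a minimal Python sketch using made-up counts (every number is hypothetical, for illustration only). Pooled together, red cars look almost three times as accident-prone; split by car type (the hidden factor), the color difference disappears. Splitting the data this way is usually called stratification.

```python
from fractions import Fraction

# Hypothetical counts for illustration only:
# (car_type, color) -> (number_of_cars, high_speed_accidents)
FLEET = {
    ("sports", "red"): (600, 30),
    ("sports", "other"): (400, 20),
    ("non-sports", "red"): (400, 4),
    ("non-sports", "other"): (8_600, 86),
}


def accident_rate(color, car_type=None):
    """Accident rate for one color, optionally within a single car type."""
    cells = [counts for (ctype, col), counts in FLEET.items()
             if col == color and (car_type is None or ctype == car_type)]
    cars = sum(n for n, _ in cells)
    accidents = sum(a for _, a in cells)
    return Fraction(accidents, cars)


# Pooled data: red looks almost three times as dangerous
for color in ("red", "other"):
    print("all cars", color, f"{float(accident_rate(color)):.1%}")

# Stratified by car type: within each type, red and other colors match
for ctype in ("sports", "non-sports"):
    for color in ("red", "other"):
        print(ctype, color, f"{float(accident_rate(color, ctype)):.1%}")
```

The pooled comparison mixes two groups with very different accident rates and very different shares of red cars, so color picks up the effect of car type.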

Somebody famous once said, “There are lies, damned lies, and statistics.”

In the red car example, 62% of those drivers had eaten French fries on the day of the accident; therefore, the French fries must have been related to the cause of the accident.

Statistics are the most “spun” facts there are.

Use them at your own peril.

And how does the Border Patrol know how many “illegals” entered the country WITHOUT being caught? Or how does the DEA know how many drugs were smuggled into the country without being detected and confiscated?

Or how many accidents are caused by drunk drivers when fewer than 5% (best estimates obtained by polling attendees at Accident Reconstruction conferences in the U.S.)