Monday Accident & Lessons Learned: Is Automation the Solution to Human Error?

The 737 MAX crashes hold valuable lessons about automation and its implementation.

Here is a video from 60 Minutes Australia that explains some of the lessons. Try to look past the sensationalism and blame, and focus on the technical details it presents.

Is 60 Minutes always correct? No. They often sensationalize their news. They sometimes get the facts wrong.

But the lessons about automation and its implementation seem rather ominous. The automation was meant to PREVENT pilot error. Instead, it seems to have caused an unrecoverable crash, or at least a situation that was very difficult to recover from.

This isn’t just a Boeing problem. This is a modern automation problem. And the problem goes beyond aviation.

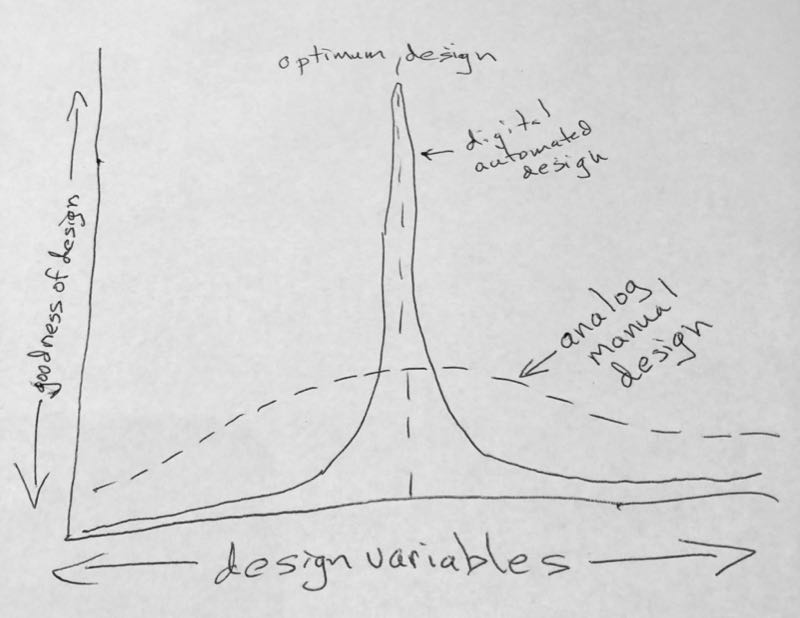

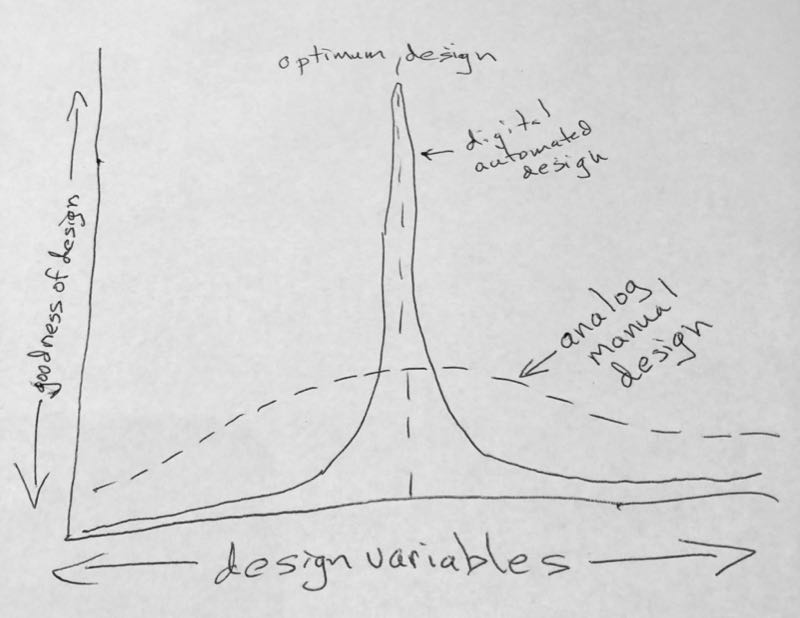

I remember having a conversation over lunch with human factors expert Jens Rasmussen. He described how much more difficult it is to design an automated system and its human factors interface than it is to design a manual, non-digital control system. He drew a diagram on a napkin showing that a good digital automation design could be 10 to 100 times better than an analog, manual control design. BUT, if the design strayed from the optimum, the digital, automated design rapidly became much worse than the analog, manual one. In other words, digital automation could fail much more dramatically than an analog, manual control system when circumstances pushed the system outside its intended "optimum" or when the designers didn't achieve the optimum design in the first place.

This was over 30 years ago, and he was talking about potential automation in the next generation of nuclear power plants. I was researching the proper role for automation and the difficulties that automation could cause. I can remember the conversation as if it were yesterday. I wish I still had the napkin, but I'll put my crude drawing of his diagram below…

The promise was that automated digital systems could be much better than the systems we were used to. However, that reward came at a price: the design had to be right on the optimum. If it missed the optimum by much, performance could be much worse than that of the older manual systems.

My research also showed that operators often put too much trust in the automation. They believed it even when it was wrong. And when things went awry, they couldn't figure out why it was doing what it was doing quickly enough to regain control.

Finally, when automation becomes pervasive, the operators lose their skills and, eventually, can’t take control when they need to because they just don’t know how to manually control the advanced systems.

Therefore, aircraft manufacturers and the designers of other automated systems, including:

- auto and truck manufacturers

- manufacturers of ship control systems

- nuclear power plant designers

- electrical transmission and distribution control system designers

- chemical plant and refinery automation designers

need to take a serious look at their human factors designs and their design review and testing processes when implementing advanced digital designs with artificial intelligence and automation.

That is at least one lesson that I think will come out of the investigations of these accidents.